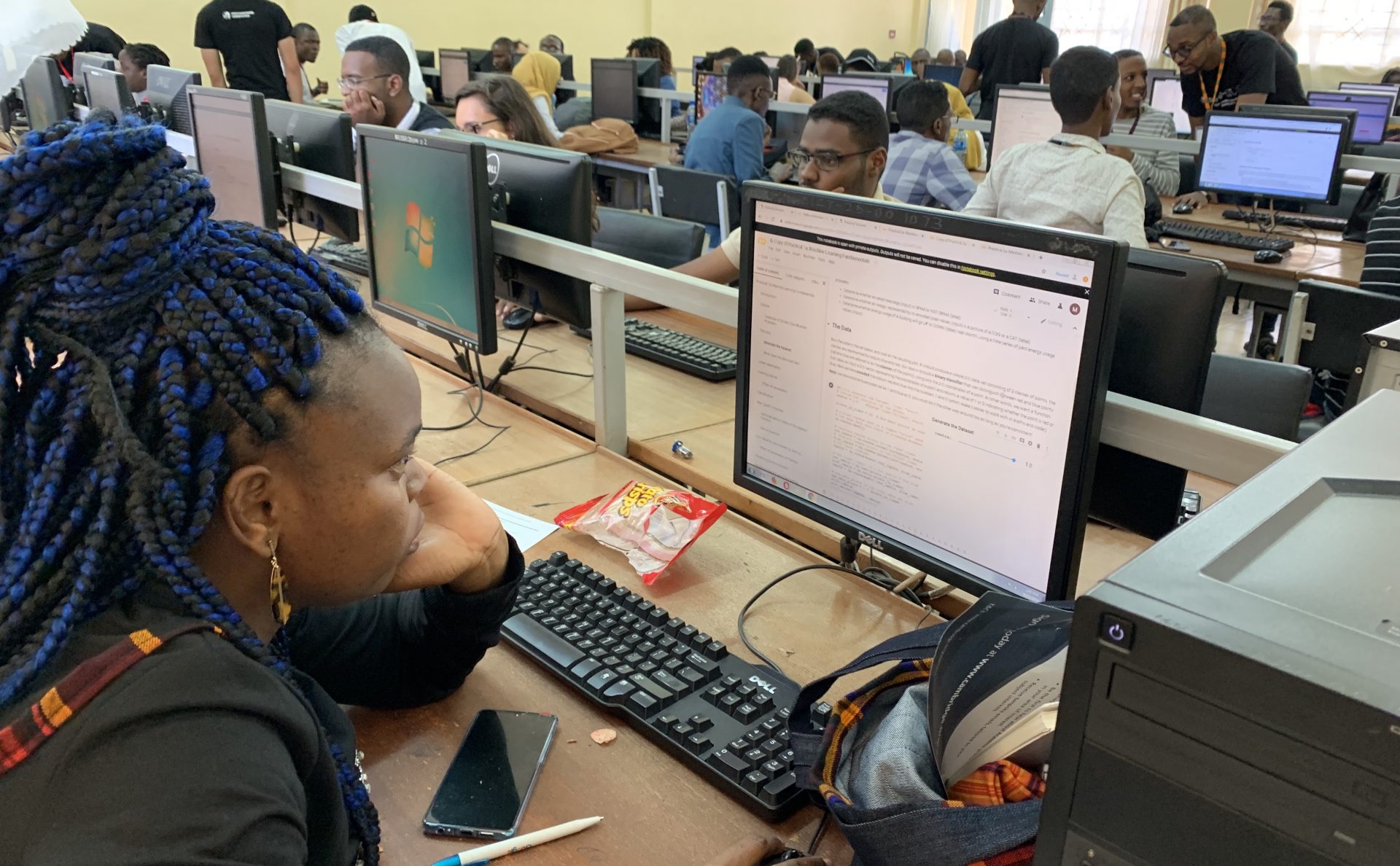

These sessions give hands-on experience and an opportunity to learn new coding skills and to work in small groups. Details coming soon.

2022 Topics

Introduction to ML using Jax

Description:

In this tutorial, we will learn about JAX, a relatively new machine learning framework that has taken deep learning research by storm! JAX is known for its speed, and we will learn how to achieve these speedups using core JAX concepts such as automatic differentiation (grad), parallelization (pmap), vectorization (vmap), just-in-time compilation (jit), and more. We will then use what we have learned to implement linear regression effectively while learning some of the fundamentals of optimization.
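As a first taste of these transforms, here is a minimal sketch (not taken from the practical itself) that applies `jax.grad`, `jax.jit` and `jax.vmap` to a toy function f(x) = sum(x²). The function and inputs are illustrative assumptions; `jax.pmap` is left out because it needs several accelerator devices to show any parallelism.

```python
import jax
import jax.numpy as jnp

# A simple scalar function of a vector: f(x) = sum(x ** 2)
def f(x):
    return jnp.sum(x ** 2)

# grad: returns a new function computing df/dx = 2x
df = jax.grad(f)

# jit: compiles f with XLA; the result is numerically the same
f_fast = jax.jit(f)

# vmap: maps f over a leading batch axis without a Python loop
batched_f = jax.vmap(f)

x = jnp.array([1.0, 2.0, 3.0])
print(df(x))          # [2. 4. 6.]
print(f_fast(x))      # 14.0
xs = jnp.stack([x, 2 * x])
print(batched_f(xs))  # [14. 56.]
```

Transforms compose, so something like `jax.jit(jax.vmap(jax.grad(f)))` gives a compiled, batched gradient function.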

Aims/Learning Objectives:

- Learn the basics of JAX and some core transforms – jit, grad, vmap and pmap.

- Learn how to build and optimize a simple Linear Regression model using JAX.

- Learn the basics of JAX libraries – Optax (for optimization) and Haiku (for neural networks).

Prerequisites:

- Basic knowledge of NumPy.

- Basic knowledge of functional programming.

Array Algebra

Description:

In this tutorial, we’ll look at one of the cornerstones of modern deep learning: array programming. Think of this tutorial as a gym or obstacle course for working with arrays. We’ll help you gain intuition about multidimensional arrays (“tensors”), so you can better understand transposing, broadcasting, contraction, and the other “algebraic moves” that make array programming both frustrating and rewarding. There will be practical examples as well as theoretical exercises to help you develop your understanding.
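A few of these “algebraic moves” in `jax.numpy`, as a small illustrative sketch with made-up shapes: transposing, broadcasting a vector against a matrix, and contraction written with `jnp.einsum`, where any index that appears in the inputs but not in the output is summed over.

```python
import jax.numpy as jnp

A = jnp.arange(6.0).reshape(2, 3)      # shape (2, 3)
B = jnp.arange(12.0).reshape(3, 4)     # shape (3, 4)

# Transposing swaps the two axes: (2, 3) -> (3, 2)
At = A.T

# Broadcasting: a (3,) vector is repeated along axis 0 of a (2, 3) array
row = jnp.array([10.0, 20.0, 30.0])
C = A + row                            # shape (2, 3)

# Contraction: sum over the shared index j, i.e. the matrix product
D = jnp.einsum("ij,jk->ik", A, B)      # shape (2, 4), equal to A @ B

# Batched contraction: the batch index b is kept, j is summed out
X = jnp.ones((5, 2, 3))
Y = jnp.ones((5, 3, 4))
Z = jnp.einsum("bij,bjk->bik", X, Y)   # shape (5, 2, 4), every entry 3.0
```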

Aims/Learning Objectives:

- Understand the nature of multidimensional arrays, their shape, and their axes.

- Learn about the many ways arrays can be combined and transformed.

- Know how these operations are exposed in common frameworks.

- Recognize common patterns of array programming in deep learning.

- Be more confident in writing new array code and understanding existing code.

Reinforcement Learning

Description:

In this tutorial, we will be learning about reinforcement learning (RL), a type of machine learning in which an agent learns to choose actions in an environment that lead to maximal reward in the long run. RL has seen tremendous success on a wide range of challenging problems, such as learning to play complex video games like Atari games, StarCraft II and Dota 2. In this introductory tutorial, we will solve the classic CartPole environment, in which an agent must learn to balance a pole on a cart, using several different RL approaches. Along the way, you will be introduced to some of the most important concepts and terminology in RL.
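“Maximal reward in the long run” is usually formalised as the discounted return G_t = r_t + γ·G_{t+1}. Below is a minimal sketch of computing it for one episode with `jax.lax.scan`; the function name, the discount factor `gamma` and the reward sequence are illustrative assumptions.

```python
import jax
import jax.numpy as jnp

def discounted_returns(rewards, gamma=0.99):
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one episode."""
    def step(carry, r):
        g = r + gamma * carry
        return g, g  # new carry, and the value to store for this step
    init = jnp.zeros((), dtype=rewards.dtype)
    # Scan over the reversed rewards, then flip back to time order
    _, returns = jax.lax.scan(step, init, rewards[::-1])
    return returns[::-1]

# CartPole gives a reward of +1 for every step the pole stays up
rewards = jnp.ones(3)
returns = discounted_returns(rewards, gamma=0.5)
```

With `gamma=0.5` and three rewards of 1, the returns are 1.75, 1.5 and 1.0: earlier steps have more future reward ahead of them, so their returns are larger.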

Aims/Learning Objectives:

- Understand the basic theory behind RL.

- Implement a simple policy gradient RL algorithm (REINFORCE).

- Implement a simple value-based RL algorithm (DQN).
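To make the two algorithm families concrete, the sketch below shows only their core formulas, under simplifying assumptions: the REINFORCE surrogate loss, whose gradient is the policy gradient, and the one-step TD target that DQN regresses its Q-values towards. Networks, replay buffers and environment code are omitted, and the function names are hypothetical rather than the practical's.

```python
import jax
import jax.numpy as jnp

def reinforce_loss(logits, actions, returns):
    """Surrogate loss whose gradient is the REINFORCE policy gradient.

    logits:  (T, num_actions) policy outputs for each visited state
    actions: (T,) integer actions actually taken
    returns: (T,) discounted returns G_t (treated as constants)
    """
    log_probs = jax.nn.log_softmax(logits)
    taken = jnp.take_along_axis(log_probs, actions[:, None], axis=1)[:, 0]
    # Minimising -sum(log pi(a_t | s_t) * G_t) ascends the expected return
    return -jnp.sum(taken * jax.lax.stop_gradient(returns))

def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """One-step TD targets y = r + gamma * max_a' Q_target(s', a').

    next_q_values: (B, num_actions) from the target network
    dones:         (B,) 1.0 where the episode ended, so no bootstrapping
    """
    return rewards + gamma * (1.0 - dones) * jnp.max(next_q_values, axis=1)

# Tiny usage example with two actions (as in CartPole: push left / right)
logits = jnp.zeros((2, 2))                       # uniform policy
actions = jnp.array([0, 1])
returns = jnp.array([1.0, 2.0])
loss = reinforce_loss(logits, actions, returns)  # equals 3 * log(2)

targets = dqn_targets(jnp.array([1.0, 1.0]),
                      jnp.array([[0.5, 2.0], [3.0, 1.0]]),
                      jnp.array([0.0, 1.0]), gamma=0.5)  # [2.0, 1.0]
```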

Prerequisites:

- Some familiarity with JAX – going through the “Introduction to ML using Jax” practical is recommended.

- Neural network basics.

Graph Neural Networks

Description:

In this tutorial, we will be learning about Graph Neural Networks (GNNs), a topic that has exploded in popularity in both research and industry. We will start with a refresher on graph theory, then dive into how GNNs work at a high level. Next, we will cover some popular GNN architectures and see how they work in practice.

Aims/Learning Objectives:

- Understand the theory behind graphs and GNNs.

- Implement Graph Convolutional Networks (GCNs).

- Implement Graph Attention Networks (GATs).

- See GNNs applied to different datasets.
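For intuition about what a GCN layer computes, here is a dense-matrix sketch of the symmetric-normalised propagation rule H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W) from the GCN paper by Kipf and Welling. The practical uses jraph, which works with sparse graph representations instead; this plain `jax.numpy` version on a 3-node toy graph is an assumption made for readability.

```python
import jax
import jax.numpy as jnp

def gcn_layer(adjacency, features, weights):
    """One GCN layer with symmetric normalisation (dense adjacency).

    adjacency: (N, N) 0/1 matrix, features: (N, F_in), weights: (F_in, F_out)
    """
    a_hat = adjacency + jnp.eye(adjacency.shape[0])   # add self-loops
    deg = jnp.sum(a_hat, axis=1)                       # node degrees
    d_inv_sqrt = 1.0 / jnp.sqrt(deg)
    # D^(-1/2) (A + I) D^(-1/2), built by broadcasting instead of diag matrices
    norm_adj = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    # Aggregate neighbour features, apply a shared linear map, then ReLU
    return jax.nn.relu(norm_adj @ features @ weights)

# A 3-node path graph 0 - 1 - 2 with 2 input features per node
adjacency = jnp.array([[0.0, 1.0, 0.0],
                       [1.0, 0.0, 1.0],
                       [0.0, 1.0, 0.0]])
features = jnp.ones((3, 2))
weights = jnp.ones((2, 4))
out = gcn_layer(adjacency, features, weights)   # shape (3, 4)
```

Nodes 0 and 2 are symmetric in this graph, so their output rows are identical; this kind of sanity check is handy when debugging message passing.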

Prerequisites:

- Some familiarity with JAX (we will be using jraph) – going through “Introduction to ML using Jax” is recommended.

- Neural network basics.

- Graph theory basics (MIT OpenCourseWare slides by Amir Ajorlou).

- We recommend watching the lecture “Theoretical Foundations of Graph Neural Networks” by Petar Veličković before attending the practical. The talk provides a theoretical introduction to GNNs, historical context, and motivating examples.

Deep Generative Models

Description:

In this practical, we will investigate the fundamentals of generative modeling, a machine learning framework that lets us learn to sample new, unseen data points that match the distribution of our training dataset. Generative modeling is a powerful and flexible framework that has provided many exciting advances in ML, but it has its own challenges and limitations. This practical will walk you through these challenges and illustrate how to address them by implementing a Denoising Diffusion Model, the backbone of the recent and exciting DALL·E 2 and Imagen models that we’ve all seen on Twitter.
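The forward half of a denoising diffusion model, which gradually adds Gaussian noise to the data, has a closed form that is easy to sketch. The code below assumes the linear β schedule (1e-4 to 0.02 over 1000 steps) and the simplified noise-prediction loss from the original DDPM paper; `predict_noise` is a hypothetical placeholder standing in for the neural network that is trained in practice.

```python
import jax
import jax.numpy as jnp

# Linear noise schedule (DDPM-style values; an assumption here)
T = 1000
betas = jnp.linspace(1e-4, 0.02, T)
alphas_bar = jnp.cumprod(1.0 - betas)   # abar_t = prod_{s<=t} (1 - beta_s)

def q_sample(x0, t, noise):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) x_0, (1 - abar_t) I) in one step."""
    abar = alphas_bar[t]
    return jnp.sqrt(abar) * x0 + jnp.sqrt(1.0 - abar) * noise

def denoising_loss(predict_noise, x0, key):
    """Simplified DDPM objective: the model predicts the noise that was added."""
    t_key, n_key = jax.random.split(key)
    t = jax.random.randint(t_key, (), 0, T)   # random timestep
    noise = jax.random.normal(n_key, x0.shape)
    x_t = q_sample(x0, t, noise)
    return jnp.mean((predict_noise(x_t, t) - noise) ** 2)

# Usage with a hypothetical placeholder "model" that always predicts zero noise
x0 = jnp.ones((4, 4))
loss = denoising_loss(lambda x_t, t: jnp.zeros_like(x_t), x0, jax.random.PRNGKey(0))
```

By the last step `alphas_bar` is close to zero, so x_T is almost pure noise; generation then runs the learned denoiser backwards from noise to data.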

Aims/Learning Objectives:

- Understand the differences between generative and discriminative modeling.

- Understand how Bayes’ rule and the probabilistic approach to ML are key to generative modeling.

- Understand the challenges of building generative models in practice, as well as their solutions.

- Understand, implement and train a Denoising Diffusion Model.

Prerequisites:

- Familiarity with JAX – going through the “Introduction to ML using Jax” practical is recommended.

- Basic linear algebra.

- Neural network basics.

- Basic probability theory (e.g. what a probability distribution is, what Bayes’ rule is).

- (Suggested) Attend the Monte Carlo 101 parallel.

Transformers & Attention

Description:

The transformer architecture, introduced in the 2017 paper “Attention Is All You Need”, has had a significant impact on deep learning. It has arguably become the de facto architecture for complex natural language processing (NLP) tasks, and it has also delivered strong results in other domains, including computer vision and reinforcement learning. In this practical, we will introduce attention in greater detail and build the entire transformer architecture block by block to see why it is such a robust and powerful architecture.
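At the heart of the architecture is scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V. A minimal sketch follows; for brevity it uses the input directly as queries, keys and values instead of learned projections, and applies the causal mask that GPT-style models use so that each token only attends to itself and earlier positions.

```python
import jax
import jax.numpy as jnp

def scaled_dot_product_attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V.

    q: (..., T_q, d_k), k: (..., T_k, d_k), v: (..., T_k, d_v)
    mask: optional boolean (..., T_q, T_k); False entries are blocked.
    """
    d_k = q.shape[-1]
    scores = jnp.einsum("...qd,...kd->...qk", q, k) / jnp.sqrt(d_k)
    if mask is not None:
        scores = jnp.where(mask, scores, -1e9)  # blocked positions get ~0 weight
    weights = jax.nn.softmax(scores, axis=-1)   # each row sums to 1
    return jnp.einsum("...qk,...kv->...qv", weights, v), weights

# Causal (GPT-style) self-attention over a sequence of 4 tokens
key = jax.random.PRNGKey(0)
x = jax.random.normal(key, (4, 8))               # (sequence length, model dim)
causal_mask = jnp.tril(jnp.ones((4, 4), dtype=bool))
out, attn = scaled_dot_product_attention(x, x, x, mask=causal_mask)
```

The `...` in the einsum strings lets the same function handle extra leading axes, such as batch and attention heads, without changes.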

Aims/Learning Objectives:

- Learn how different attention mechanisms can be implemented.

- Learn about, and build from scratch, the basic building blocks of the most common transformer architectures.

- Learn how to train a sequence-to-sequence model.

- Create and train a small GPT-inspired model.

- Learn how to use the Hugging Face library for quicker development cycles, and train your own chatbot intent model.

Prerequisites:

- Sequence-to-sequence methods based on RNNs.

- Basic linear algebra.

Bayesian Deep Learning Practical

Description:

Bayesian inference provides us with the tools to update our beliefs consistently when we observe data. Compared to the more common loss minimisation approach to learning, Bayesian methods offer us calibrated uncertainty estimates, resistance to overfitting, and even approaches to select hyper-parameters without a validation set. However, this more sophisticated form of reasoning about our machine learning models has some drawbacks: more involved mathematics and a larger computational cost. In this practical, we will explore the basics of Bayesian reasoning with discriminative models. You will learn to implement Bayesian linear regression, a powerful model where Bayesian inference can be performed exactly. We will then delve into more complex models like logistic regression and neural networks, where approximations are required. You will learn to implement “black box” variational inference, a general algorithm for approximate Bayesian inference which can be applied to almost any machine learning model.
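Bayesian linear regression is tractable because a Gaussian prior and a Gaussian likelihood give a Gaussian posterior in closed form. The sketch assumes a prior w ~ N(0, α⁻¹I) with α = `prior_precision` and a known noise variance σ² = `noise_var` (both illustrative values), so the posterior covariance is S = (αI + XᵀX/σ²)⁻¹ and the posterior mean is m = S Xᵀy/σ².

```python
import jax
import jax.numpy as jnp

def blr_posterior(X, y, prior_precision=1.0, noise_var=0.1):
    """Exact Gaussian posterior over w for y = X w + eps.

    Assumes prior w ~ N(0, prior_precision^-1 I) and eps ~ N(0, noise_var).
    """
    d = X.shape[1]
    precision = prior_precision * jnp.eye(d) + X.T @ X / noise_var
    cov = jnp.linalg.inv(precision)
    mean = cov @ X.T @ y / noise_var
    return mean, cov

def blr_predict(X_new, mean, cov, noise_var=0.1):
    """Predictive mean and variance: noise plus parameter uncertainty x^T S x."""
    pred_mean = X_new @ mean
    pred_var = noise_var + jnp.einsum("nd,de,ne->n", X_new, cov, X_new)
    return pred_mean, pred_var

# Data from y = 2x, with a column of ones as a bias feature; inputs lie in [0, 1]
key = jax.random.PRNGKey(0)
x = jax.random.uniform(key, (20,))
X = jnp.stack([x, jnp.ones_like(x)], axis=1)
y = 2.0 * x
mean, cov = blr_posterior(X, y)
# Predict at x = 0.5 (inside the data) and x = 10 (far outside it)
_, var = blr_predict(jnp.array([[0.5, 1.0], [10.0, 1.0]]), mean, cov)
```

The predictive variance at x = 10 comes out much larger than at x = 0.5, which is one concrete form of the calibrated uncertainty estimates described above.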

Aims/Learning Objectives:

- Understand the trade-offs between maximum likelihood and fully Bayesian learning.

- Implement Bayesian linear regression.

- Understand the challenges of Bayesian inference in non-conjugate models and the need for approximate inference.

- Implement “black box” variational inference.

- Understand the trade-offs between different methods for approximate Bayesian inference, e.g. variational inference vs. Monte Carlo methods.
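“Black box” variational inference only needs the model’s log joint density. One common way to implement it, sketched here, is to fit a mean-field Gaussian q(w) by maximising a Monte Carlo estimate of the ELBO with the reparameterisation trick; the practical may use a different gradient estimator. The logistic regression model, N(0, 1) prior, toy data and learning rate are all illustrative assumptions.

```python
import jax
import jax.numpy as jnp
from jax.scipy.stats import norm

def log_joint(w, X, y):
    """log p(y | X, w) + log p(w) for logistic regression with a N(0, 1) prior."""
    logits = X @ w
    # Bernoulli log-likelihood with labels y in {0, 1}, written stably
    log_lik = jnp.sum(y * jax.nn.log_sigmoid(logits)
                      + (1 - y) * jax.nn.log_sigmoid(-logits))
    log_prior = jnp.sum(norm.logpdf(w))
    return log_lik + log_prior

def elbo(var_params, X, y, key, num_samples=16):
    """Monte Carlo ELBO for q(w) = N(mu, diag(exp(log_std)^2))."""
    mu, log_std = var_params
    eps = jax.random.normal(key, (num_samples,) + mu.shape)
    # Reparameterised samples: differentiable w.r.t. mu and log_std
    ws = mu + jnp.exp(log_std) * eps
    expected_log_joint = jnp.mean(jax.vmap(lambda w: log_joint(w, X, y))(ws))
    # Exact entropy of a diagonal Gaussian
    entropy = jnp.sum(log_std) + 0.5 * mu.size * (1.0 + jnp.log(2 * jnp.pi))
    return expected_log_joint + entropy

# Gradient ascent on the ELBO with a tiny synthetic dataset (illustrative values)
key = jax.random.PRNGKey(0)
X = jnp.array([[1.0, 1.0], [2.0, 1.0], [-1.0, 1.0], [-2.0, 1.0]])
y = jnp.array([1.0, 1.0, 0.0, 0.0])
var_params = (jnp.zeros(2), jnp.zeros(2))
grad_elbo = jax.jit(jax.grad(elbo))
for _ in range(500):
    key, subkey = jax.random.split(key)
    grads = grad_elbo(var_params, X, y, subkey)
    var_params = jax.tree_util.tree_map(lambda p, g: p + 0.01 * g, var_params, grads)
```

Nothing in `elbo` is specific to logistic regression: swapping in a different `log_joint`, such as one for a small neural network, is what makes the method “black box”.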

Prerequisites:

- Familiarity with JAX.

- Basic linear algebra.

- Basics of Bayesian inference: for example, the 15-minute YouTube video “Bayes theorem, the geometry of changing beliefs” and/or the Bayesian Inference talk from the Indaba.

- Recommended: Attend the Monte Carlo 101 parallel.

Getting Help

Several tutors will be around during each session to help you work through the practicals. Helping each other is part of the Indaba spirit, and you can do this using the #practicals channel on Slack.